By Humphrey McQueen

Writing in 1977, the ‘father’ of algorithmic analysis, Stanford Professor and recipient of the 1974 Turing Award, Donald E. Knuth, observed that

[t]en years ago, the word ‘algorithm’ was unknown to most educated people; indeed, it was scarcely necessary. The rapid rise of computer science, which has the study of algorithms as its focal point, has changed all that; the word is now essential.

Knuth went on to acknowledge that there were

several other words that almost, but not quite, capture the concept that is needed: procedure, recipe, process, routine, method, rigmarole. Like these things, an algorithm is a set of rules or directions for getting a specific output from a specific input. … the rules must describe operations that are so simple and well-defined they can be executed by a machine.

He has not made the algorithm sound like the Archangel Gabriel announcing the Messiah. Technical dictionaries shake off more of its mystique: ‘A mechanical procedure for solving a problem in a finite number of steps (a mechanical procedure is one which requires no ingenuity) …’[1] An on-line offering is hardly more flattering: ‘An algorithm acts as an exact list of instructions to carry out specified actions step by step.’
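How little ingenuity such a ‘mechanical procedure’ demands can be seen in a minimal sketch of Euclid’s rule for finding the greatest common divisor of two whole numbers. The example is a textbook staple chosen here for illustration, not one taken from the sources quoted above, and the function name `gcd` and the sample numbers are assumptions.

```python
def gcd(a: int, b: int) -> int:
    """Greatest common divisor of two non-negative integers by Euclid's rule."""
    while b != 0:
        # Replace the pair (a, b) with (b, remainder of a divided by b).
        # The remainder is always smaller than b, so the loop must end.
        a, b = b, a % b
    return a


print(gcd(1071, 462))  # prints 21
```

Each pass through the loop is a single division, the kind of operation ‘so simple and well-defined’ that a machine can carry it out, and the shrinking remainder guarantees a finite number of steps.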

Grasping these definitions is a country mile from being able to write a computer program, which, as Knuth goes on to explain, is

the statement of an algorithm in some well-defined [computational] language. Thus, a computer program represents an algorithm, although the algorithm itself is a mental concept which exists independently of any representation. In a similar way, the concept of the number 2 exists in our minds without being written down.[2]

True. But a handful of us are able to write computer programs only because some of our forebears learnt how to write down ‘2,’ or one of its equivalents, such as the Roman ‘II’, as aids to mental processes. From there, savants advanced into fractions, decimals, zero, negative numbers, probability, differential calculus and infinity. In the second quarter of the seventeenth century, Galileo declared mathematics to be the language of nature, and Descartes bridged geometry and algebra; some 200 years later, a club of Cambridge undergraduates, including Charles Babbage, simplified Newton’s notation of differential calculus. ‘By the middle of the nineteenth-century,’ Marx remarks, ‘a schoolboy could learn the binomial theorem in an hour, an intellectual achievement which had taken humankind centuries to master.’[3]

Algorithms, programs and computers interact because human reasoning, allied to our free-ranging imaginations, compensates for our limited capacities to compute, which machines surpass to the power of n.

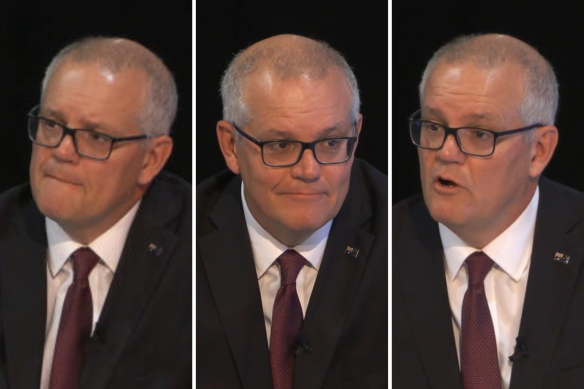

Robocop: That mathematical power did not boot up one morning and decide to inflict the illegalities of Robodebt on nearly half a million of our most vulnerable.[4] A different power was at work. As Treasurer, Robocop-out Morrison enforced an economic policy to drive the ‘undeserving poor’ off the public purse. As one strand in slashing the budget deficit, he threatened the Australian Council of Social Service at its 2016 National Conference: ‘welfare must become a good deal for investors – for private investors. We have to make it a good deal, for the returns to be there.’ Class bias, not a computer program, fuelled the bushwhack.

Knuth argued that ‘an algorithm must always terminate after a finite number of steps.’ Not at Centrelink. Its pursuit of 11,000 Covid-era beneficiaries while corporates clung to $4.6 billion in JobKeeper payments is not a ‘double standard’ but standard operating procedure for any class-riven regime. Each time corporations are caught underpaying wage-slaves, CEOs plead ‘computer error.’ How is it that their machines never get it into their programs to overpay us?

‘Averaging’ the victims’ incomes could have been done by a twelve-year-old with a pocket calculator. The political economy behind Robodebt emphasises why wage-slaves have to explore more exactly how algorithms and programs are being used to generate weapons against our class. Pursuing the range of their applications is imperative if we are to move beyond tactical manoeuvres and develop strategies to challenge how agents of the boss-class in state-apparatuses will use every level of machine-intelligence against us.
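The pocket-calculator arithmetic can be sketched in a few lines. What follows is an illustration only, not Centrelink’s program: it assumes that ‘averaging’ means spreading a yearly total evenly across 26 fortnights, and the worker, the dollar figures and the function names are all invented for the example.

```python
FORTNIGHTS_PER_YEAR = 26


def averaged_fortnightly_income(annual_income: float) -> float:
    """Spread a yearly total evenly across every fortnight (assumed method)."""
    return annual_income / FORTNIGHTS_PER_YEAR


def overstated_fortnights(actual: list[float]) -> int:
    """Count the fortnights in which the average exceeds what was really earned."""
    average = averaged_fortnightly_income(sum(actual))
    return sum(1 for earned in actual if average > earned)


# Hypothetical worker: 13 fortnights on $1,000, then 13 fortnights with no wages.
actual_income = [1000.0] * 13 + [0.0] * 13

print(averaged_fortnightly_income(sum(actual_income)))  # prints 500.0
print(overstated_fortnights(actual_income))  # prints 13
```

On these invented figures, the average credits the worker with $500 in each of the 13 fortnights when nothing was earned. The sum is trivial; the decision to treat its result as evidence of a debt was not made by the calculator.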

Starting some 1,000 years back with the fulling-mill to add density and durability to wool,[5] property-owning classes have had technologies designed so as to exact more surplus produce from our capacities to add value, as David Noble documented for U.S. corporations.[6] The same applies to algorithms. A triumphant proletariat will not be able to take either over as they are; we shall need to reprogram them as steps along the road to a classless society.

[1] David Nelson (ed.), Dictionary of Mathematics (London: Penguin, 1998), 7 and 8. The term derives from the name of the Arab mathematician al-Khwarizmi c.750-c.820.

[2] Donald E. Knuth, “Algorithms,” Scientific American, 237, no. 3 (1977): 63.

[3] Karl Marx, Theories of Surplus-Value, Marx-Engels Collected Works, vol. 34 (London: Lawrence & Wishart, 1988), 87.

[4] Nathalie Marechal, “First They Came for the Poor: Surveillance of Welfare Recipients as an Unencumbered Practice,” Media and Communication, 3, no. 3 (2013): 56-67; Saabiq Chowdhury, “Technology is never neutral: Robodebt and human rights an analysis of automation decision-making on welfare recipients,” Australian Journal of Human Rights, 30, no. 1 (2024): 20-40.

[5] A. Rupert Hall and N.C. Russell, “What about the Fulling-Mill?,” History of Technology, 6 (1981): 114-5; Leslie Syson, British Water-Mills (London: Bradford, 1965), 63.

[6] David Noble, America by Design: Science, Technology and the Rise of Corporate America (New York: Alfred Knopf, 1977); ????, and his Forces of Production: A Social History of Industrial Automation (New York: Alfred Knopf, 1984); Sigfried Giedion, Mechanisation takes command ( : , 1948).